In twenty-first-century Silicon Valley, the meaninglessness of the past and the uselessness of history became articles of faith, gleefully performed arrogance. “The only thing that matters is the future,” said the Google and Uber self-driving car designer Anthony Levandowski in 2018. “I don’t even know why we study history. It’s entertaining, I guess—the dinosaurs and the Neanderthals and the Industrial Revolution and stuff like that. But what already happened doesn’t really matter. You don’t need to know history to build on what they made. In technology, all that matters is tomorrow.”

Jill Lepore, IF THEN: How the Simulmatics Corporation Invented the Future

At the beginning of 2021, as I read Jill Lepore’s book IF THEN: How the Simulmatics Corporation Invented the Future, I was surprised that such a story wasn’t well known despite its strong connections to recent discussions and controversies around data and AI applications. But as the author articulates in the book, I believe one of the main reasons is the prevalent ignorance of tech history and the intentional neglect of past experiences (especially the failures) in the field. For many founders, investors and executives, all that matters is the future: a future they claim to know more about, because they are the visionaries at the forefront of innovation.

Clearly, anyone involved in designing and developing data-intensive and machine-learning-based systems loses a lot by ignoring history, the social sciences and other fields. They also become more susceptible to repeating mistakes if they don’t learn from the past or question the narratives of those who deliberately ignore it.

In this article I’d like to highlight some patterns and draw some parallels between the story of Simulmatics and subsequent tech hypes, especially the ongoing hype around Large Language Models (LLMs).

About Simulmatics

Simulmatics was established as a company in 1959 and declared bankruptcy in 1970. The founders picked the name as a mash-up of ‘simulation’ and ‘automatic’, hoping to coin a new term that would live for decades, which evidently didn’t happen! They worked on building what they called the People Machine to simulate and predict human behavior. It was marketed as a revolutionary technology that would completely change business, politics, warfare and more. Doesn’t this sound familiar?!

As the author explains:

They believed that by simulating human behavior, their People Machine could help the human race avert each and every disaster. It could defeat communism. It could counter insurgencies. It could win elections. It could sell mouthwash. It could accelerate news, like so much amphetamine. It could calm agitated wives. It could win the war in Vietnam by targeting hearts and minds. It could predict race riots, and even plagues. It could end chaos. The scientists of Simulmatics believed they had invented “the A-bomb of the social sciences.”1

Despite having no solid product, Simulmatics’ founders were selling a vision. They raised funds, signed contracts and had an IPO with no proof of success. This pattern can be observed over and over with every hype in the tech world. In most cases, the hype creators and supporters benefit in one way or another, harm other groups and hardly bear any serious consequences.

So let’s pick some themes from the Simulmatics story that are relevant to the present!

1- Data Collection/Annotation and Ghost Work

In the case of Simulmatics, a significant portion of the data curation and annotation work was given to the wives and kids of the company’s founders. Clients thought there was an advanced “coding” technique behind the data labeling work.

There was no television in their house, but McPhee charged his children with collecting all the TV Guides from all the other houses and marking them up: coding. Simulmatics needed data for Media-Mix and, in particular, data about television schedules. This assignment—preparing a report called “Preliminary Codes for Television Program File (Media Mix)”—fell to McPhee, who passed it on to his children. But as one of the company’s clients complained to Greenfield, “there is a serious question as to whether your coding equipment could be sensitive to a sufficient degree to have any real value.” That coding equipment? That coding equipment was Wendy and Jock.1

Now, with OpenAI and ChatGPT, many users think that filters and rules related to toxic and controversial topics are applied automatically by the system without input from humans. After all, it is the “path to AGI” as OpenAI advertises, so for users it is a logical conclusion that the system is smart enough to fix its own problems. But it is no secret that underpaid annotators are powering this filtering, and they are rarely mentioned in the hype-driven articles.

2- Bias and Representation in Data

In 1962, when the Times (currently The New York Times) hired Simulmatics “to help run its coverage of the [midterm] election”1, things were chaotic and many deficiencies appeared in Simulmatics’ operation of the hardware and software. Harold Faber, the newspaper’s daily assignment editor, was skeptical about their ability to deliver any value. In the end he was right: the involvement of Simulmatics was a complete mess in the newsroom.

One important observation came from Layhmond Robinson Jr., “the Times’s best-informed reporter on the African American vote”1: Simulmatics used only one checkpoint in New York City, where about 350,000 Black voters lived.

“I was surprised to learn, on Election night, from Mr. Pool and another of the Simulmatics men, that they were using only one Negro checkpoint in New York city, where about 350,000 of the state’s 400,000 Negro voters live,” Robinson later told Faber. “This checkpoint was on 109th Street, which is not an all-Negro area but one populated by a mixture of whites, Negroes and Puerto Ricans.” It was ridiculous. “Unless we can do a better job on the Negro vote next time around I think we ought to forget it and stop confusing our readers,” Robinson told Faber. “This time it was a flop.”1

Even in the 1960s, people saw that collecting data is a design choice and that being able to inspect this data is important. One might think that we are past this, after decades of research and development. But every day we see examples of disproportionate representation in data and a lack of diversity in the groups controlling the curation process.

3- Underestimation of Competent Practitioners and Researchers

On the same midterm election night in 1962, the Simulmatics guys were overconfident. They thought they had everything figured out because they had the super duper machine! But Faber described them as amateurs who underestimated competent technicians who happened to be, guess what? Women, of course!

Faber called Greenfield in a fury and told him “his men were running an amateur night.” Simulmatics, Faber said, “frankly, not only underestimated, but completely misjudged the importance of competent technicians.” IBM stepped in, promising to deliver a number of “girls” on Election Night, since they, unlike the men of Simulmatics, actually knew how to operate all the equipment.1

Now, with the LLM and AI hype, it is very common to see executives, investors and even scientists underestimating researchers or practitioners whose work is essential to developments in the field of AI. They undermine the work of linguists, social scientists, designers, engineers and anyone who is not in their echo chamber. But when things get messy, they start to seek out those skill sets or hijack those fields. For instance, they take a concept like “AI Safety” and use it for totally different, unrelated purposes.

4- Absence of a Rigorous Scientific Methodology

As Simulmatics took on more projects, anyone working with them could see the flaws in their approaches and the lack of any rigorous methodology.

4-1 No Solid Theory

Van Vleck was a student at MIT who worked on Pool’s project ComCom to “build a simulated population of Communists”1 in order to predict their response to different political messages. He said he couldn’t see how this model would “produce any meaningful prediction when it did not rest on any known theory of human behavior”1. But he was an undergrad and needed the money, so he couldn’t argue more!

Imagine how many people nowadays work at labs and corporations on AI-related projects that are hype-driven, harmful or useless. In many cases the ones who raise concerns get alienated or punished because they dared to go against the current and speak truth to power. On the other hand, many, like Van Vleck, feel they have no choice except to go with the flow and give their managers what they want. Either they decide that this is how they will survive and build a career, or they are stuck for one reason or another.

4-2 Violation of Scientific Methodology

During Simulmatics’ involvement in the Vietnam War, Ithiel de Sola Pool, a quantitative behavioral scientist and one of the main figures of the company, wanted to evaluate “the effectiveness of television as a tool for counterinsurgency”1. A lieutenant at ARPA who had a PhD in psychology “worked with him on the project and entirely lost faith in him as a scientist”1. Another military official stated that it “sounded as though someone had taken a book of rules about scientific methodology, then systematically violated each one”1.

Now, with OpenAI’s ChatGPT, we can see several systematic violations of scientific methodology, chief among them:

- training data contamination, as explained by Arvind Narayanan and Sayash Kapoor in their article GPT-4 and professional benchmarks: the wrong answer to the wrong question.
- lack of valid and rigorous evaluation methodologies, as highlighted by Melanie Mitchell in her posts Did ChatGPT Really Pass Graduate-Level Exams?
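To make the idea of training data contamination concrete, here is a minimal sketch of one common heuristic: flagging benchmark items that share a long word sequence (an n-gram) with the training corpus. This is an illustration only, not the method used in the articles cited above or by any model developer. The function names, the whitespace tokenization and the default window of 8 words are all assumptions made for the example.

```python
def ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """Return the set of word-level n-grams in a text (lowercased, whitespace-split)."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def contamination_rate(benchmark_items: list[str],
                       training_corpus: list[str],
                       n: int = 8) -> float:
    """Fraction of benchmark items sharing at least one n-gram with the training corpus.

    Hypothetical helper: the exact-match rule and the window size are
    illustrative assumptions, not an established standard.
    """
    if not benchmark_items:
        return 0.0
    # Collect every n-gram that appears anywhere in the training documents.
    train_grams: set[tuple[str, ...]] = set()
    for doc in training_corpus:
        train_grams |= ngrams(doc, n)
    # An item is flagged if any of its n-grams was also seen in training.
    flagged = [item for item in benchmark_items if ngrams(item, n) & train_grams]
    return len(flagged) / len(benchmark_items)


if __name__ == "__main__":
    corpus = ["the quick brown fox jumps over the lazy dog near the river bank today"]
    benchmark = [
        "Quick brown fox jumps over the lazy dog near the river",
        "completely unrelated question about prime numbers and their distribution in arithmetic",
    ]
    print(contamination_rate(benchmark, corpus))  # prints 0.5
```

Note that even this simple check requires access to the training data. When that data is closed, outsiders cannot run it at all.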

Nowadays, it seems that no matter how much critics talk about these points, the developers of such systems either don’t see the issue, treat any criticism as opposition to progress, or expect the public to test and evaluate their models for free.

For instance, when Meta’s Galactica was criticized and pulled back, Yann LeCun stated that the developers took it down to “protect their own mental well-being” because they were “distraught by the vitriol on Twitter”. He saw the public criticism as an attack that impeded progress!

No claim has been walked back.

But the team who built Galactica was so distraught by the vitriol on Twitter that they decided to take it down.

So, progress towards a system that "stands up to scrutiny" has paused.

Is that good?

Yann LeCun (@ylecun), November 18, 2022

5- Technology for Oppression

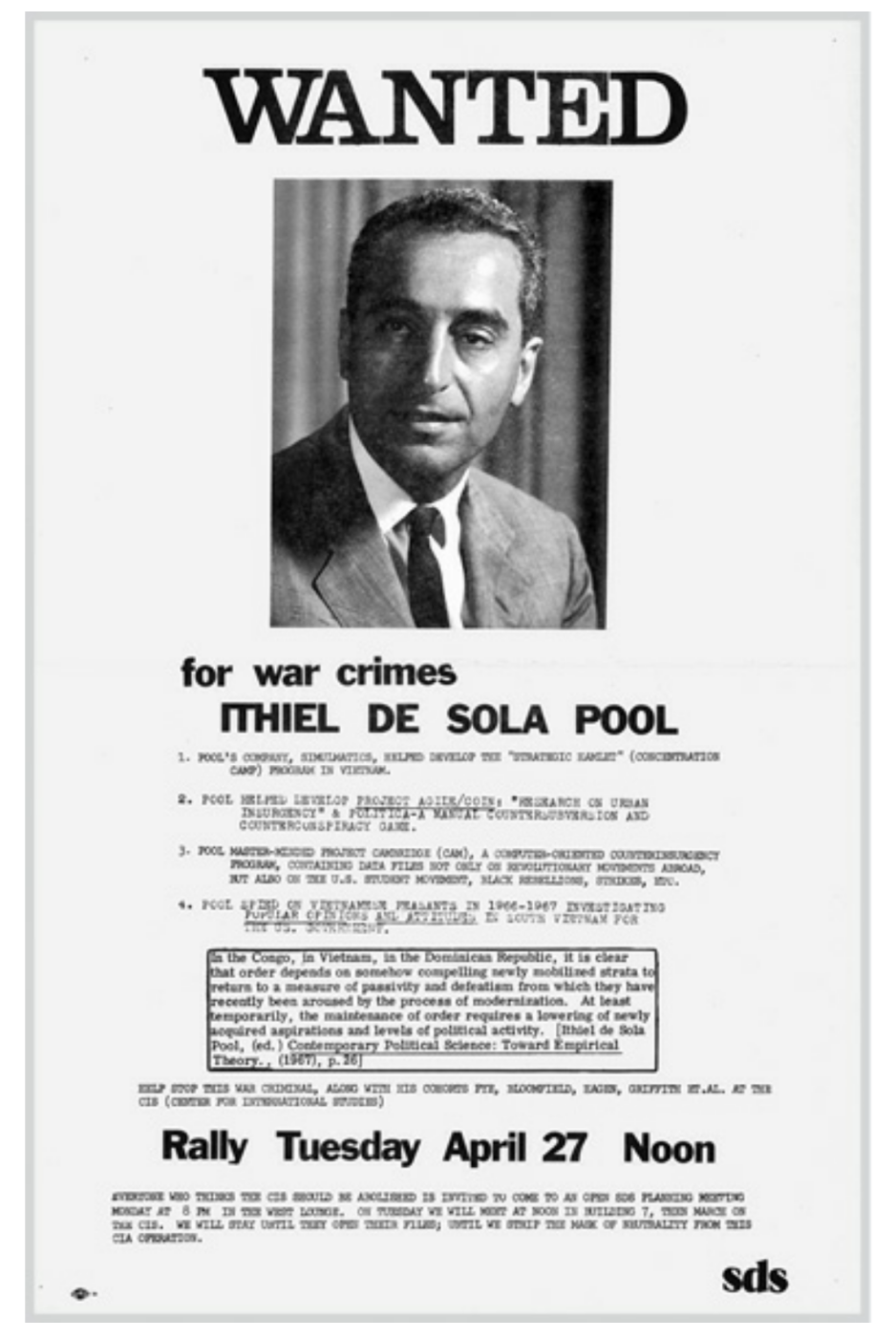

Despite Simulmatics’ multiple failures, the company kept entering into more collaborations with the military and the government. They cared only about profit, with no moral grounding. As a result, they got involved in the Vietnam War and worked on a counterinsurgency program to collect data “not only on revolutionary movements abroad, but also on the U.S. student movement, black rebellions, strikes”1 and more! After the collapse of Simulmatics, Pool pursued other projects focused on linking and collecting data for different purposes.

But even at that point, people remembered Simulmatics and Pool’s role in supporting oppression. Students at MIT rallied against him, called him a war criminal, and got distinguished faculty members to join them in opposing Pool’s projects at MIT.

Nowadays, we know that tech companies also chase military and government contracts. In some cases the employees know and protest, and in other cases such contracts are hidden. For instance, we recall the pushback against Project Maven, and now the No Tech For Apartheid campaign against Project Nimbus.

With the current LLM hype, I can imagine lots of agreements being signed to deliver exclusive products to governmental entities. Eventually, tech companies will make money even if their products are a total failure, and people will bear the consequences.

So What Can We Learn from These Parallels?

Looking at these recurring patterns in the tech world, it should be obvious to everyone that we are not experiencing a totally unique event.

- On one hand, there have always been groups that tried to concentrate power and wealth while presenting their so-called innovations as revolutionary and beneficial to the world. They appealed to authority, inflated the capabilities of their products and supported oppression.
- On the other hand, there were the persistent voices who questioned the scientific methodology, opposed the use of flawed products and protested against the technology of oppression.

Pushing back and raising awareness is definitely a tedious and draining task for those who keep at it. So hopefully more people will learn from history, avoid falling prey to the hype, and join forces to amplify the reasonable voices!